This is the second post of my series about understanding text datasets. If you read my blog regularly, you have probably noticed quite a few posts about named entity recognition. In those posts, we focused on finding the named entities and explored different techniques to do so. This time, we use the named entities to learn something about the dataset itself. We use the “Quora Insincere Questions Classification” dataset from Kaggle, a competition in which Kagglers develop models that identify and flag insincere questions.

Load the data

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt

plt.style.use("ggplot")

df = pd.read_csv("data/train.csv")
print(f"Loaded {df.shape[0]} samples.")
```

```
Loaded 1306122 samples.
```

First, let’s look at some of the questions.

```python
print("\n".join(list(df.sample(10).question_text.values)))
```

```
What causes you to lose your hearing when you drink alcohol?
I just turned 14 and already had a growth spurt at 11, will I still grow taller?
Are Muslims terrorist?
How do you find the median of a list that won't fit in memory?
How good is SRM University for research, I heard it is very easy with money?
What is the best way to achieve self discipline?
How does Gmail determine what gets moved to (or out of) their Social, Promotions, and Forum filtering?
Where can I practice Java 8 stream API?
Does a UX Researcher need to be very good at drawing?
What were the reasons why labor unions formed?
```

We draw a random subsample of 10,000 questions to keep the named entity recognition fast.

```python
n_samples = 10000
df_sample = df.sample(n_samples)
```
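A plain random sample keeps the class ratio only on average, and without a seed the counts below change from run to run. As a minimal sketch, assuming you want a reproducible subsample that keeps each class's share of the data (up to rounding), you can group the rows by label and sample the same fraction from each group with a seeded `random.Random`. The helper `stratified_sample` and the toy rows are hypothetical; in pandas 1.1+, `df.groupby("target").sample(frac=..., random_state=0)` does the same job on a DataFrame.

```python
import random
from collections import defaultdict


def stratified_sample(rows, label_of, n_samples, seed=42):
    """Draw about n_samples rows while keeping each label's share of the data.

    rows: list of records; label_of: function returning a record's label.
    Hypothetical helper for illustration, not part of the original post.
    """
    by_label = defaultdict(list)
    for row in rows:
        by_label[label_of(row)].append(row)

    rng = random.Random(seed)  # seeded, so the same call gives the same sample
    fraction = n_samples / len(rows)
    sample = []
    for label in sorted(by_label):  # fixed label order keeps results deterministic
        group = by_label[label]
        k = round(len(group) * fraction)  # per-group rounding: total may be off by one
        sample.extend(rng.sample(group, k))
    return sample


# Toy data (hypothetical): 95 sincere (0) and 5 insincere (1) questions.
rows = [(f"question {i}", 1 if i < 5 else 0) for i in range(100)]
subset = stratified_sample(rows, label_of=lambda r: r[1], n_samples=20)
print(len(subset), sum(label for _, label in subset))
```

Here a 20% sample keeps 19 sincere and 1 insincere question, matching the 95:5 ratio of the toy data.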

Detect the named entities using spaCy

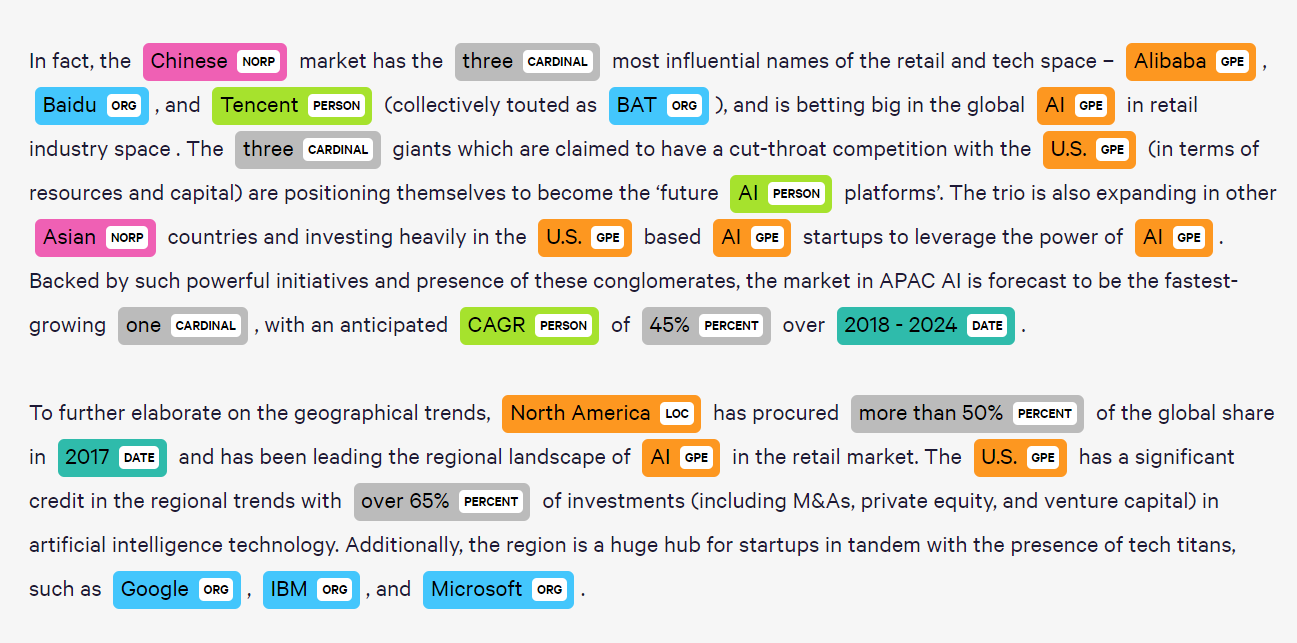

We use the pre-trained named entity tagger of the popular spaCy library. Its English models are trained on the OntoNotes 5 corpus and support 18 named entity types. The list of supported types can be found here: https://spacy.io/usage/linguistic-features#entity-types

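```python
import spacy
from spacy import displacy

# Load the small English pipeline (install it with `python -m spacy download en_core_web_sm`).
# Only the entity recognizer is needed, so the parser, tagger and lemmatizer are disabled.
nlp = spacy.load("en_core_web_sm", disable=["parser", "tagger", "lemmatizer"])

sentence = str(df_sample.iloc[0].question_text)
sentence_nlp = nlp(sentence)

# print every token that is part of a named entity, together with its entity type
print([(word, word.ent_type_) for word in sentence_nlp if word.ent_type_])

# visualize the named entities in the notebook
displacy.render(sentence_nlp, style="ent", jupyter=True)
```

```
[(GATE, 'GPE')]
```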

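Next, we run the tagger over all sampled questions and keep each question's target label.

```python
# nlp.pipe processes the texts in batches, which is faster than calling nlp() on each one.
ner_questions = list(zip(nlp.pipe(df_sample.question_text), df_sample.target))
```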

Find the most common named entity types overall and by target class.

```python
net_question = [([w.ent_type_ for w in s if w.ent_type_], t) for s, t in ner_questions]

# if no named entity found, add "None"
net_question = [(s, t) if s else (["None"], t) for s, t in net_question]
```
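Because the "None" placeholder is added at most once per question, it doubles as a count of questions without any detected entity. A small sketch, assuming `net_question` holds the `(entity_type_list, target)` pairs built above, turns that into a per-class share. The function name `share_without_entities` and the toy input are hypothetical.

```python
from collections import Counter


def share_without_entities(net_question):
    """Return {target: fraction of questions whose type list is just ["None"]}."""
    totals = Counter()
    empty = Counter()
    for types, target in net_question:
        totals[target] += 1
        if types == ["None"]:
            empty[target] += 1
    return {target: empty[target] / totals[target] for target in sorted(totals)}


# Toy input in the same shape as net_question (hypothetical values).
toy = [
    (["None"], 0),
    (["ORG", "GPE"], 0),
    (["None"], 0),
    (["NORP"], 1),
    (["PERSON", "PERSON"], 1),
]
print(share_without_entities(toy))  # {0: 0.6666666666666666, 1: 0.0}
```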

Flatten the named entity types list.

```python
ners = [ne for n in net_question for ne in n[0]]

from collections import Counter

ner_cnt = Counter(ners)
ner_cnt.most_common(10)
```

```
[('None', 4845),
 ('ORG', 3114),
 ('PERSON', 2204),
 ('GPE', 1967),
 ('DATE', 1559),
 ('NORP', 1047),
 ('CARDINAL', 823),
 ('WORK_OF_ART', 298),
 ('LOC', 254),
 ('TIME', 185)]
```
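The per-class counts that follow filter `net_question` once for each class. As an alternative, a minimal sketch builds one `Counter` per target value in a single pass using a `defaultdict`; `count_by_class` is a hypothetical name, and it assumes the same `(entity_list, target)` pairs as above.

```python
from collections import Counter, defaultdict


def count_by_class(net_question):
    """Map each target value to a Counter of the entities seen in that class."""
    counts = defaultdict(Counter)
    for entities, target in net_question:
        counts[target].update(entities)
    return counts


# Toy input in the shape of net_question (hypothetical values).
toy = [
    (["ORG", "GPE"], 0),
    (["None"], 0),
    (["NORP", "NORP"], 1),
    (["ORG", "GPE", "ORG"], 0),
]
counts = count_by_class(toy)
print(counts[0].most_common(2))  # [('ORG', 3), ('GPE', 2)]
print(counts[1].most_common(1))  # [('NORP', 2)]
```

On the real data, `count_by_class(net_question)[1].most_common(10)` should reproduce the insincere list computed with the list comprehension.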

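Almost half of the sampled questions (4,845 of 10,000) contain no named entity at all. Next, we group the named entity types by target class (0 = sincere, 1 = insincere).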

```python
sincere_ners = [ne for n in net_question for ne in n[0] if not n[1]]
ner_cnt = Counter(sincere_ners)
ner_cnt.most_common(10)
```

```
[('None', 4652),
 ('ORG', 2882),
 ('PERSON', 1955),
 ('GPE', 1791),
 ('DATE', 1503),
 ('CARDINAL', 776),
 ('NORP', 716),
 ('WORK_OF_ART', 278),
 ('LOC', 216),
 ('TIME', 176)]
```

```python
insincere_ners = [ne for n in net_question for ne in n[0] if n[1]]
ner_cnt = Counter(insincere_ners)
ner_cnt.most_common(10)
```

```
[('NORP', 331),
 ('PERSON', 249),
 ('ORG', 232),
 ('None', 193),
 ('GPE', 176),
 ('DATE', 56),
 ('CARDINAL', 47),
 ('LOC', 38),
 ('WORK_OF_ART', 20),
 ('LAW', 15)]
```

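In the insincere questions, the most common named entity type is “NORP”, which stands for “nationalities or religious or political groups”. In the sincere questions, NORP ranks only seventh, and questions without any entity (“None”) are by far the most frequent case.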
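Raw counts make this comparison awkward, because the sincere class is much larger. A minimal sketch normalizes each class's counts by that class's total and divides the insincere share by the sincere share; a ratio above 1 means the type is over-represented in insincere questions. As an assumption for illustration, it is fed only the top-10 counts printed above, so each total covers just the listed types, and types missing from one of the lists (LAW, TIME) are skipped. The function name `overrepresentation` is hypothetical.

```python
from collections import Counter


def overrepresentation(sincere, insincere):
    """Return (type, ratio) pairs, sorted by how much larger a type's share
    is among insincere questions than among sincere ones."""
    sincere_total = sum(sincere.values())
    insincere_total = sum(insincere.values())
    ratios = {}
    for ne_type in sincere.keys() & insincere.keys():  # only types seen in both
        sincere_share = sincere[ne_type] / sincere_total
        insincere_share = insincere[ne_type] / insincere_total
        ratios[ne_type] = insincere_share / sincere_share
    return sorted(ratios.items(), key=lambda item: item[1], reverse=True)


# Top-10 entity-type counts copied from the outputs above.
sincere = Counter({"None": 4652, "ORG": 2882, "PERSON": 1955, "GPE": 1791,
                   "DATE": 1503, "CARDINAL": 776, "NORP": 716,
                   "WORK_OF_ART": 278, "LOC": 216, "TIME": 176})
insincere = Counter({"NORP": 331, "PERSON": 249, "ORG": 232, "None": 193,
                     "GPE": 176, "DATE": 56, "CARDINAL": 47, "LOC": 38,
                     "WORK_OF_ART": 20, "LAW": 15})

for ne_type, ratio in overrepresentation(sincere, insincere)[:3]:
    print(f"{ne_type}: {ratio:.2f}")
```

With these numbers, NORP's share is roughly five times larger among insincere questions (ratio 5.09), followed by LOC (1.94) and PERSON (1.40). Running the same function on the full `Counter` objects instead of the top-10 lists would give a more faithful picture.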

Find the most common named entities, i.e. the tokens tagged as part of an entity, overall and by class.

```python
net_question = [([w.text for w in s if w.ent_type_], t) for s, t in ner_questions]

# if no named entity found, add "None"
net_question = [(s, t) if s else (["None"], t) for s, t in net_question]

ners = [ne for n in net_question for ne in n[0]]
ner_cnt = Counter(ners)
ner_cnt.most_common(10)
```

```
[('None', 4845),
 ('the', 284),
 ('India', 241),
 ('one', 132),
 ('Quora', 126),
 ('Trump', 120),
 ('year', 112),
 ('Indian', 105),
 ('-', 89),
 ("'s", 88)]
```
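Since these counts are per token, function words such as “the” and “'s” appear whenever the tagger included them in a longer entity, and multi-word names such as “Donald Trump” are split. spaCy already groups entity tokens into spans, available as `doc.ents` (each span has `.text` and `.label_`). To show what that grouping does, here is a minimal stdlib sketch that rebuilds spans from per-token IOB tags, i.e. the values spaCy exposes as `token.ent_iob_` and `token.ent_type_`. The helper `merge_entity_tokens` and the example tokens are hypothetical, and joining tokens with single spaces is a simplification (spaCy's span text keeps the original whitespace).

```python
def merge_entity_tokens(tokens):
    """Group (text, iob, ent_type) token triples into (span_text, ent_type) pairs.

    iob is "B" (begins an entity), "I" (inside one) or "O"/"" (outside).
    """
    spans = []
    current_words, current_type = [], None
    for text, iob, ent_type in tokens:
        # Close the open span when the entity ends or a new one begins.
        if current_words and iob != "I":
            spans.append((" ".join(current_words), current_type))
            current_words, current_type = [], None
        if iob in ("B", "I"):
            if not current_words:
                current_type = ent_type
            current_words.append(text)
    if current_words:  # an entity that runs to the end of the question
        spans.append((" ".join(current_words), current_type))
    return spans


# Hypothetical tagger output for one question.
tokens = [("Why", "O", ""), ("does", "O", ""), ("Donald", "B", "PERSON"),
          ("Trump", "I", "PERSON"), ("visit", "O", ""), ("the", "B", "GPE"),
          ("United", "I", "GPE"), ("States", "I", "GPE"), ("?", "O", "")]
print(merge_entity_tokens(tokens))
# [('Donald Trump', 'PERSON'), ('the United States', 'GPE')]
```

With the real data, a span-level count should follow from `Counter(ent.text for doc, _ in ner_questions for ent in doc.ents)`.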

Group the named entities by target class.

```python
sincere_ners = [ne for n in net_question for ne in n[0] if not n[1]]
ner_cnt = Counter(sincere_ners)
ner_cnt.most_common(10)
```

```
[('None', 4652),
 ('the', 255),
 ('India', 224),
 ('one', 128),
 ('year', 106),
 ('Quora', 105),
 ('years', 83),
 ('Indian', 82),
 ("'s", 80),
 ('first', 77)]
```

```python
insincere_ners = [ne for n in net_question for ne in n[0] if n[1]]
ner_cnt = Counter(insincere_ners)
ner_cnt.most_common(10)
```

```
[('None', 193),
 ('Trump', 66),
 ('the', 29),
 ('Muslims', 28),
 ('Indians', 23),
 ('Indian', 23),
 ('Quora', 21),
 ('Muslim', 20),
 ('America', 20),
 ('Donald', 17)]
```

We notice that many of the insincere questions focus on “Trump”, “Muslims” and “Indians”. This suggests that insincere questions often target political figures and national or religious groups, and that many of them have racist content.

I hope this post gave you some ideas about how to use named entity recognition to analyze and understand your text dataset. If you want to train your own named entity tagger, have a look at my post about BERT. Stay tuned for more posts about understanding text.